Escaping The Containment Unit

The world of AI is evolving so fast that you’d be forgiven for assuming that using this new technology, or even finding it, was easy or convenient. But every model has a different interface and, crucially, a different price point. OpenAI’s ChatGPT Plus costs $20 a month for access to GPT-4, image creator Midjourney costs anywhere from $10 to $60 a month depending on what you’re doing with it, and audiovisual AI tools like Runway or ElevenLabs have various other pricing structures.

So it’s not uncommon to encounter a piece of AI content or hear about some new development and simply have no idea how to try it yourself.

Back in March, I wrote about how I was waiting for a company to centralize all of this by rolling out an app that could handle all these different processes and work across all devices. Well, it seems like that’s exactly what Microsoft is planning for its newest version of Bing and the Edge browser. Both will soon have fully integrated versions of GPT-4 and DALL-E 2 running inside them. I got to try them out at an event in Manhattan yesterday. You can read more about my hands-on test with the app here.

The point is, it’s here. Starting very soon, everyone, for free, will be able to use most of OpenAI’s suite of tools. And, most important of all, the new version of Bing AI is shareable. As in, you can ask it something, send someone a link, and when they open it, they’re inside the AI’s chat interface. It’s worth stopping to think about how fundamental a shift that is.

Right now the sharing loop for AI works like this: You see an AI generation on social media or maybe someone sends you something. It’s either a screenshot of text or an image or maybe, more recently, a video. You can, obviously, share that very easily, but it’s much harder to figure out how to make your own. You have to hunt down the tool that made it and reverse engineer the prompts and workflow. But with Bing AI you can literally just link to the still-active query thread.

I have to imagine Microsoft did the math and realized it could make more money by opening the floodgates than by charging users to play with it. And if that math is correct and users like this, the internet is going to shift very quickly as competitors race to catch up.

But even if Microsoft weren’t knocking down the walls of generative AI and letting everyone come in and muck about, this would have happened anyway. Open source AI models are evolving so quickly that I’m genuinely beginning to wonder if the singularity officially started on August 22, 2022, with the launch of Stable Diffusion. And I’m not the only one wondering. Yesterday, a few different folks sent me the leaked “We Have No Moat” document, allegedly written by a Google employee.

If you haven’t read it yet, you should. I think it’s broadly correct. It argues that open source AI can and will develop faster than anything Google, OpenAI, or Meta can make, and there’s nothing they can do about it. The Googler does make one interesting point, though: the language model driving the open source AI world right now is a leaked version of Meta’s LLaMA, which means Meta has slightly more influence over where this is all heading than anyone else. Way to go, Zuck, you doomed the entire industry, but you did get the final say in how it goes down in flames.

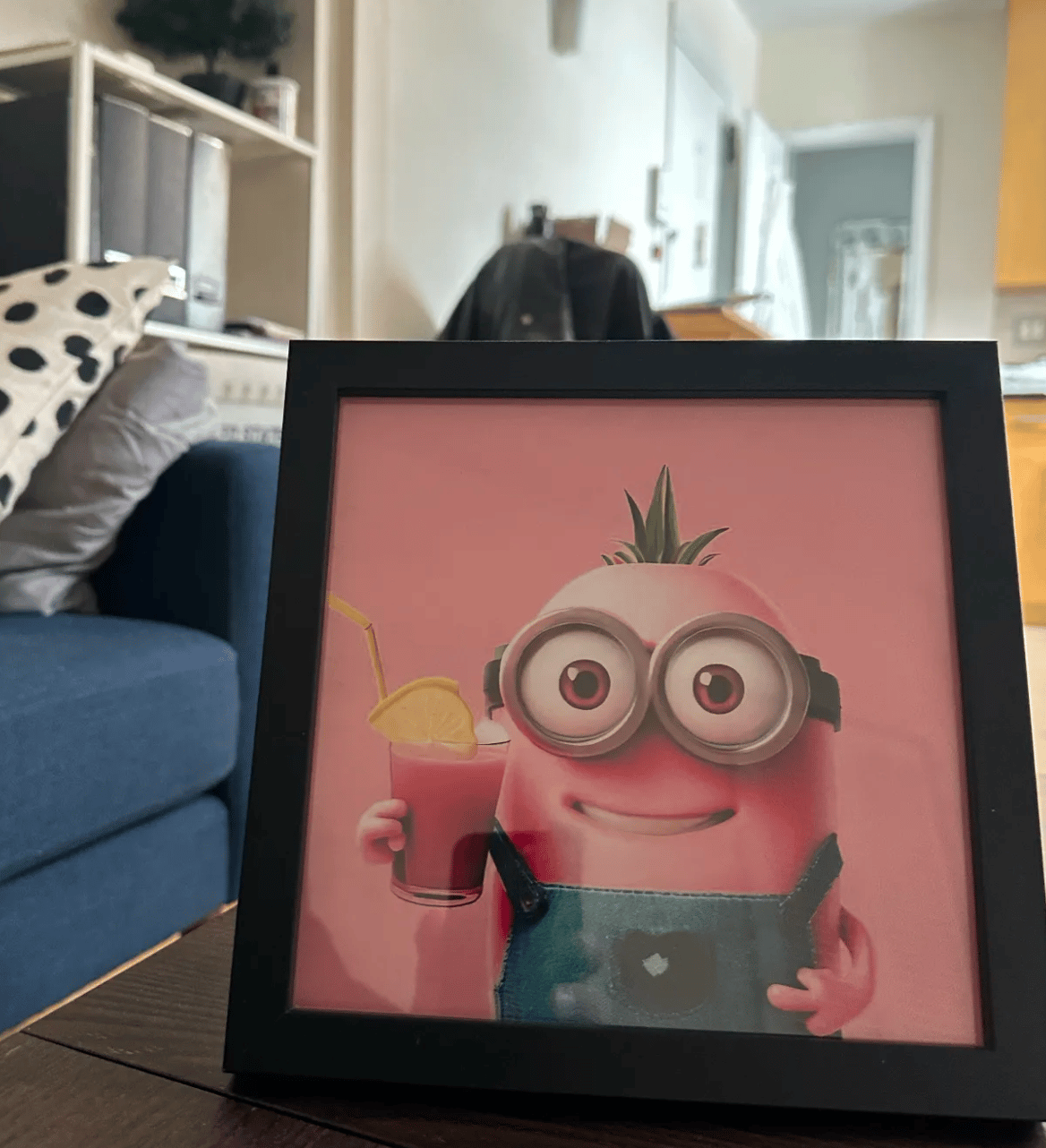

And I’ve recently seen this dichotomy in action: the widening gulf between corporate-owned AI and open source. At the Microsoft event yesterday, there was a station where you could generate images with DALL-E 2. You could then email them to a printer in another room, where they’d be printed out and framed for you. I asked it to make a Minion drinking a tropical drink, and now it’s sitting on my desk. Very cool. Perfect conversation piece for my apartment.

Meanwhile, on Reddit, a user shared something they made for their “intern project”. It’s a retro-futuristic photo booth running on an old phone switchboard. It takes your photo, feeds it into Stable Diffusion, uses the switchboard to assign different aesthetics to it, and then prints out your new AI-augmented photo. I love my framed Minion, but that’s the coolest shit I’ve ever seen.

And my hunch is we’re going to be feeling that way more and more over the next few months. Yeah, the corporate models will be fun and increasingly easy to use, but some weird thing that someone put on GitHub is what’s going to change the world.

I’ll Be In Europe Next Week!

I’ll be speaking in Hamburg, Germany, next Tuesday at the OMR Festival, and I’ll be performing in Milan, Italy, the next day, Wednesday, at Celebrità di internet e studiosi di meme. If you’re at either, definitely say hi!

And think about subscribing to Garbage Day. It’s $5 a month or $45 a year and you get lots of fun extra stuff, including tomorrow’s weekend edition. Hit the green button below to find out more!

How To Talk About Fanfic

I think there’s an art to talking about bad user-generated content. Let’s say you come across a picture of Sonic the Hedgehog drawn with hyper-realistic feet that have stink lines coming off them. Sure, it’s a weird image. It’s weird that somebody made it. It’s even weirder that other people are enthusiastically sharing it because they can’t get enough of Sonic’s stinky feet.

But someone did make it. And other people are genuinely sharing it. And while I do think it’s OK to acknowledge how weird all of that is, it’s also important to never forget that you are still talking about something someone made. And nine times out of ten, they didn’t make it for money or fame, but because they just really needed to see Sonic’s stinky feet.

This week, New York Magazine published a piece that did not, I would argue, keep that in mind while discussing Succession fanfic. First, let’s be clear: no one should be writing about Succession, full stop. It’s just WWE for media workers, and every article published about it is just more content for content’s sake. But, second, the point of New York Mag’s weird piece seems to be, “Fanfic of this show exists? A show that is GOOD?” Which, like, duh, of course it does. It has two tall white guys in it who wear suits and are friends. Cousin Greg looks like if the Once-ler went to business school.

Fanfic writers are rightly furious about the piece, with many expressing shock at how weirdly mean it is. But the point I want to end on here is that when you refuse to learn why something weird is happening online and just stop and gawk at it, you miss what is always a more interesting story. A piece diving into why so many Archive of Our Own users want to see Cousin Greg go into heat when he sees Tom Wambsgans would be much better! (I’m assuming Greg is the omega in the ship, but I have to spend some time today doing more research.)

No One Cares About Real Podcasts On YouTube

Bloomberg has a great piece out this week detailing how big-time podcasts like The New York Times’ The Daily and Slate’s ICYMI are flopping hard on YouTube. Many of Slate’s podcasts have fewer than 100 views on the platform.

But first, let’s back up. Over the last few years, YouTube has arguably become the biggest podcast platform on the web. But those podcasts are largely video podcasts. This has created an interesting content ecosystem, where these huge, long shows are clipped down into shorter videos and put on TikTok, which, I have to assume, is helping fuel the success of the shows back on YouTube. This is how Andrew Tate was able to dominate TikTok, and it’s also why porn stars are making fake podcasts to promote their OnlyFans.

According to Bloomberg, YouTube has been approaching big media companies and asking them to put their shows up on the platform. And they are, but no one’s watching. And the reason “established” podcasts are flopping on YouTube is simple: They weren’t made for YouTube.

YouTube “podcasts” aren’t really podcasts. They’re closer to a daytime talk show. They’re filmed, you can see the hosts, and they rarely have any sort of complicated audio production. They look like podcasts in the same way The View looks like a stage play. You build on the visual aesthetic of the previous medium because the audience knows it.

So, yeah, none of these shows are going to do well on YouTube because they are podcasts and YouTube podcasts aren’t podcasts. Get it?

What Do Venture Capitalists Actually Do?

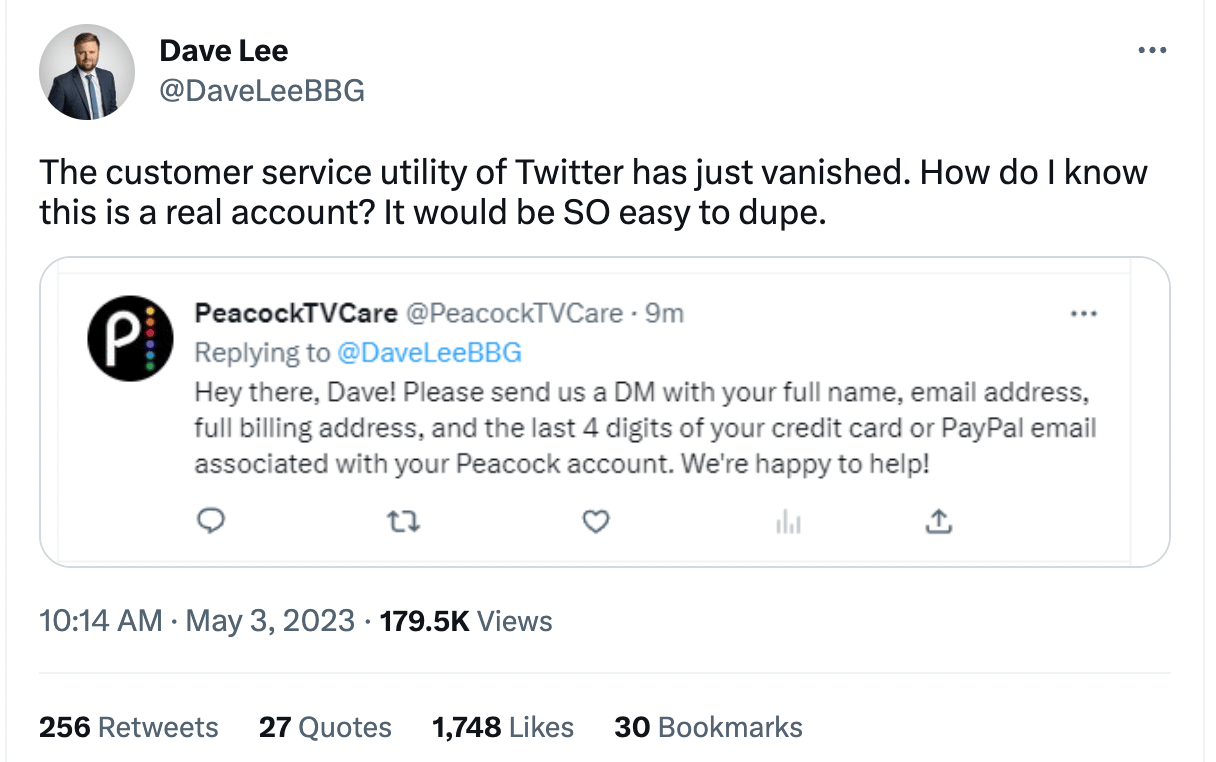

Don’t Talk To Brands On Twitter Anymore

I imagine this is going to be tough for folks to process, but it’s probably time to start treating Twitter as an unsafe space. Even though some brands and institutions are verified with the gold piss checkmark, many aren’t. And that has made the entire site really difficult to navigate. If you were ever a person who used the site to talk to a customer service rep, I would cut that shit out right now. Honestly, based on how shaky it is as an app, I’m not even really comfortable using DMs anymore.

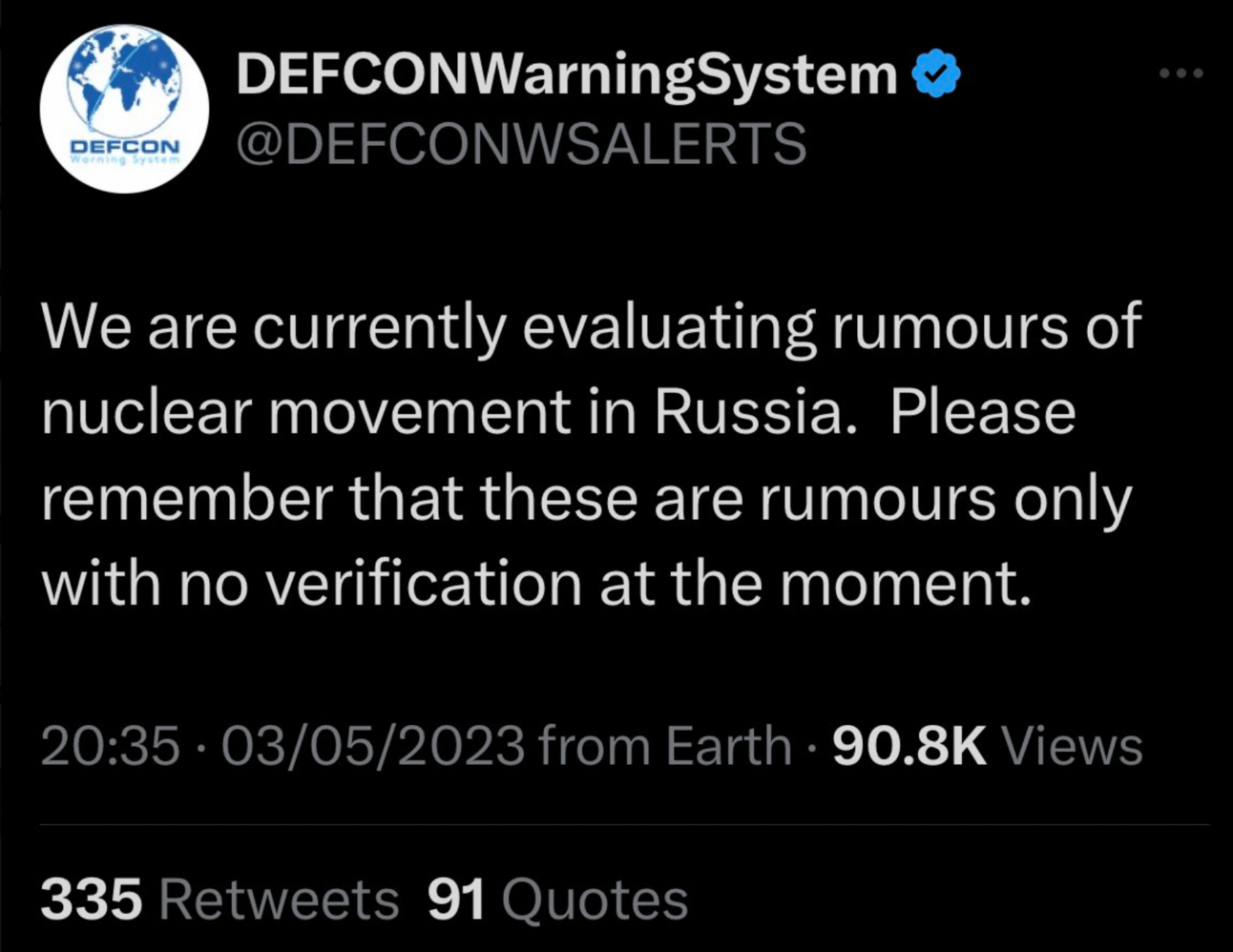

Making things even more confusing, the people who are verified now are the dumbest, least interesting people on the planet. And two days ago, many of them started tweeting that Russia was going to fire a nuke at Ukraine. Very cool!

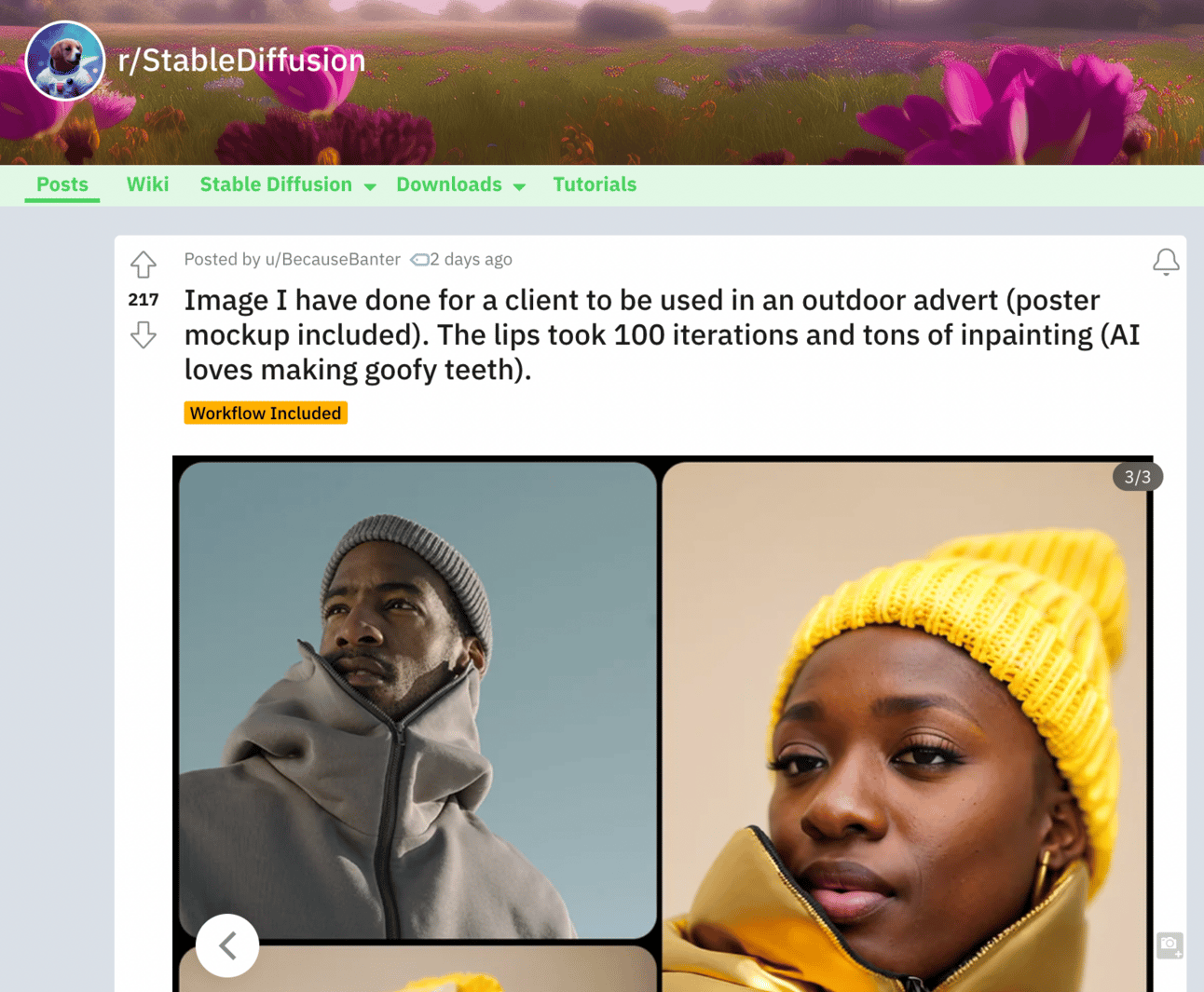

What A Professional AI Workflow Looks Like

A redditor broke down how they recently used Stable Diffusion to make a billboard ad and it’s fascinating (and more than a little unnerving). Here’s how they did it.

First, they took a Creative Commons photo of a man looking to the left (pictured above) and used it as the input photo. Using a tool called ControlNet, Stable Diffusion picked up the general structure of the photo, and then the redditor put a prompt in. The AI combined the details from the prompt with the features of the input photo. The redditor said they then used a process called inpainting to touch up small details. Inpainting lets you paint over a section of the image and have the AI generate something new in just that area, in the same way an app like Photoshop lets you paint colors with a digital brush.

What I think is so interesting about this process is that it is, effectively, the same way you would use a stock photo in an ad campaign without AI. Go find a picture you like, alter it to taste, and there you go. But with Stable Diffusion, the alterations that are possible are so far beyond anything you could do in Photoshop that it’s hard to conceptualize, and they take a fraction of the time.
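For anyone wondering what inpainting actually does to the pixels, here’s a toy Python sketch of just the masking idea. It’s a big simplification and not the redditor’s pipeline: the “generated” image is hard-coded where a real tool would get it from Stable Diffusion, and the function name `composite_inpaint` is made up for illustration. The only point is that pixels under the area you brushed in get replaced, and everything else stays exactly as it was.

```python
# Toy illustration of the masking idea behind inpainting.
# Hypothetical helper, not the redditor's workflow or any real library's API.
# Images are tiny grayscale grids: lists of rows of ints (0-255).

Image = list[list[int]]
Mask = list[list[bool]]


def composite_inpaint(original: Image, generated: Image, mask: Mask) -> Image:
    """Return a new image: generated pixels where mask is True, original pixels elsewhere."""
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("images and mask must have the same height")
    result: Image = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        if not (len(orig_row) == len(gen_row) == len(mask_row)):
            raise ValueError("images and mask must have the same width")
        # Take the new pixel only where the "brush" painted the mask
        result.append([g if m else o for o, g, m in zip(orig_row, gen_row, mask_row)])
    return result


if __name__ == "__main__":
    original = [[10, 10, 10], [10, 10, 10]]
    # Stand-in for what the AI would generate for the painted area
    generated = [[200, 200, 200], [200, 200, 200]]
    # "Paint" over the middle column only, like brushing a touch-up area
    mask = [[False, True, False], [False, True, False]]
    print(composite_inpaint(original, generated, mask))
    # → [[10, 200, 10], [10, 200, 10]]
```

Real inpainting tools typically also blur or feather the edges of the mask so the seams don’t show; this sketch skips that.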

Look At This Big Sleepy Guy

(Tumblr mirror for folks in non-TikTok regions.)

Some Stray Links

P.S. Here’s Linkin Park’s Hybrid Theory but with a Pokémon soundfont.

***Any typos in this email are on purpose actually***